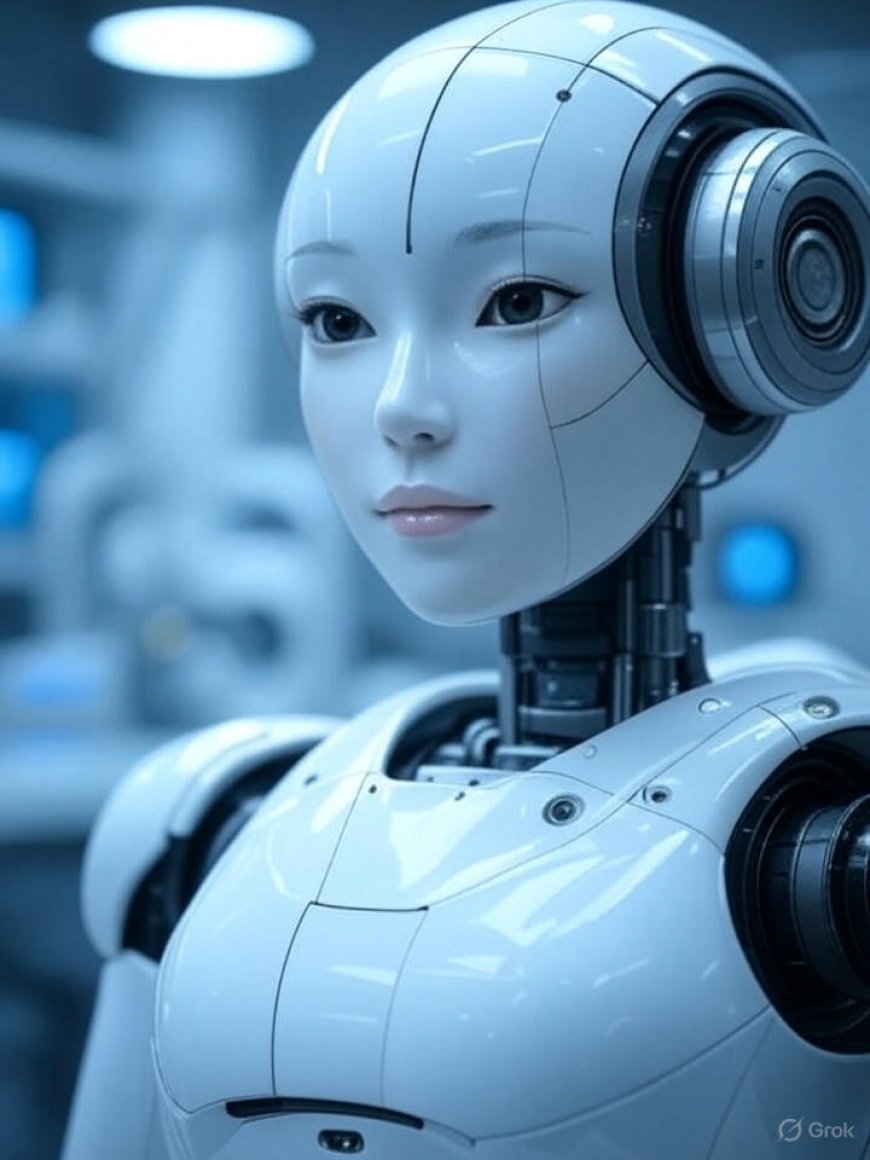

Exploring the History of Artificial Intelligence

Explore the fascinating history of artificial intelligence, from ancient myths to modern breakthroughs in deep learning and NLP.

Artificial Intelligence (AI) has become one of the most transformative technologies of the 21st century. From intelligent assistants to autonomous vehicles and predictive analytics, AI is changing the way we live, work, and think. However, to truly appreciate its impact today, we must delve into the history of Artificial Intelligence, a journey that began centuries ago, rooted in philosophy, mathematics, and human curiosity.

1. The Philosophical Foundations of AI

The earliest concepts of artificial beings date back to ancient mythology. The Greek tale of Talos, a giant bronze man created to protect Crete, and the Golem of Jewish folklore are symbolic of humans' fascination with creating intelligent beings.

In philosophy, thinkers such as René Descartes and Gottfried Wilhelm Leibniz posed early questions about logic, reasoning, and whether human thought could be replicated by machines. These philosophical inquiries laid the groundwork for what would later become known as AI.

2. Mathematical Foundations and the Birth of Computing

The 19th century saw significant progress in formal logic. George Boole, for example, developed Boolean algebra, which later became essential to the design of digital circuits and computer logic.

But it wasn't until the 20th century that the idea of artificial intelligence started to become tangible. In 1936, Alan Turing described an abstract computing device, known today as the Turing machine, and showed that a single universal machine could simulate any other such machine, and so carry out any procedure that can be written as a precise sequence of steps. This theoretical breakthrough laid the foundation for modern computing.

"Instead of trying to produce a program to simulate the adult mind, why not rather try to produce one which simulates the child's?" (Alan Turing, "Computing Machinery and Intelligence," 1950)

3. The Birth of Artificial Intelligence (1950s)

The term "artificial intelligence" was coined by John McCarthy in the 1955 proposal for the Dartmouth Conference (formally, the Dartmouth Summer Research Project on Artificial Intelligence), which was held in 1956 and organized by McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon. This conference marked the official beginning of AI as a field of academic study.

During this period, AI researchers developed programs that could solve problems, prove theorems, and play games like checkers and chess. One notable program was the Logic Theorist (1955), developed by Allen Newell, Herbert A. Simon, and Cliff Shaw, which proved many of the theorems in Whitehead and Russell's Principia Mathematica.

4. The Early Optimism and First AI Winter (1960s–1970s)

Encouraged by early successes, scientists predicted that human-level AI would be achieved in just a few decades. Governments and institutions poured money into AI research.

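However, the reality was more complex. Early systems could only solve problems in very limited domains: they lacked common-sense knowledge, were held back by the limited memory and computing power of the time, and could not adapt to situations outside the narrow problems they were built for. By the mid-1970s, following critical assessments such as the UK's 1973 Lighthill Report, funding decreased and progress slowed. This period of disillusionment became known as the First AI Winter.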

5. Expert Systems and the Rise of Rule-Based AI (1980s)

AI experienced a resurgence in the 1980s with the emergence of Expert Systems, programs that encoded the knowledge of human specialists as if-then rules in order to mimic their decision-making.

A famous example was XCON, developed by Digital Equipment Corporation to configure computer systems automatically. Businesses began investing in AI once more, leading to commercial applications in finance, healthcare, and manufacturing.

However, Expert Systems also had limitations: they were expensive to maintain, brittle when faced with situations their rules did not cover, and unable to learn from experience. This led to a second decline in funding and interest in the late 1980s and early 1990s, often referred to as the Second AI Winter.

6. Machine Learning Revolution (1990s–2000s)

The 1990s brought a new focus: Machine Learning (ML), a subfield of AI where algorithms improve automatically through experience. Instead of hard-coded rules, systems began learning from data.

Key innovations included:

- Decision trees and neural networks
- Support Vector Machines (SVM)
- Statistical Natural Language Processing (NLP) models

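One landmark achievement was IBM's Deep Blue, which defeated world chess champion Garry Kasparov in a six-game match in 1997. Although Deep Blue relied mainly on massive search and hand-tuned evaluation rather than machine learning, its victory was a defining moment for AI in competitive games and strategic reasoning.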

7. Deep Learning and the AI Boom (2010s–Present)

The 2010s marked the golden era of Deep Learning, powered by advances in hardware (like GPUs), the availability of large datasets, and improved algorithms. Deep learning uses artificial neural networks with many layers, loosely inspired by the structure of the brain, to learn patterns directly from data.

In 2012, AlexNet, a deep convolutional neural network developed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, won the ImageNet competition by a wide margin over the runner-up, setting the stage for explosive growth in AI applications.

Breakthroughs followed in:

- Speech recognition (Siri, Alexa, Google Assistant)
- Computer vision (facial recognition, self-driving cars)
- Language models like OpenAI's GPT series
- Healthcare, with AI models helping to detect diseases in medical images and, on some narrow tasks, matching specialist performance

8. Timeline of Key AI Milestones

| Year | Event | Description |
|---|---|---|
| 1950 | Turing Test | Alan Turing proposes a test to measure machine intelligence |
| 1956 | Dartmouth Conference | AI officially becomes a field of research |
| 1972 | SHRDLU | AI system simulates understanding of language in a virtual blocks world |
| 1980 | Expert Systems | Rise of rule-based AI for business |
| 1997 | Deep Blue | Defeats world chess champion Garry Kasparov |
| 2012 | AlexNet | Deep learning wins the ImageNet challenge |
| 2020 | GPT-3 | Natural language model with 175B parameters |
| 2022 | ChatGPT | Conversational AI becomes widely accessible |

9. Modern Applications of AI

AI is no longer a laboratory concept; it's now an everyday tool.

- Healthcare: AI helps in diagnostics, drug discovery, and robotic surgery.
- Finance: Fraud detection, risk management, and algorithmic trading.
- Retail: Personalized shopping experiences and customer support.
- Transportation: Autonomous vehicles and route optimization.
- Entertainment: AI-generated art, music, and content recommendations.

10. The Future of Artificial Intelligence

As we move forward, the focus is shifting towards Responsible AI: building systems that are ethical, explainable, and unbiased. Key research areas include:

- Artificial General Intelligence (AGI): Systems that would possess human-level reasoning across a wide range of tasks
- Neurosymbolic AI: Combining neural networks with symbolic reasoning
- AI Ethics: Ensuring fair and inclusive algorithms
- Human-AI Collaboration: Enhancing rather than replacing human roles

"The pace of progress in artificial intelligence is incredibly fast. Unless you have direct exposure to groups like DeepMind, you have no idea how fast: it is growing exponentially."

Conclusion

From ancient myths to cutting-edge algorithms, the history of artificial intelligence is a fascinating journey through human ingenuity. As AI continues to evolve, understanding its origins helps us navigate its future with wisdom, caution, and optimism. With responsible development, AI holds the potential to enhance nearly every aspect of life, turning once-science-fiction dreams into everyday reality.
